Modular Lifelong Reinforcement Learning via Neural Composition

International Conference on Learning Representations (ICLR), 2022

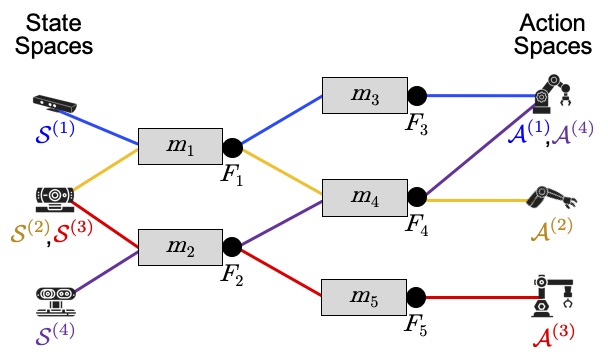

Abstract: Humans commonly solve complex problems by decomposing them into easier subproblems and then combining the subproblem solutions. This type of compositional reasoning permits reuse of the subproblem solutions when tackling future tasks that share part of the underlying compositional structure. In a continual or lifelong reinforcement learning (RL) setting, this ability to decompose knowledge into reusable components would enable agents to quickly learn new RL tasks by leveraging accumulated compositional structures. We explore a particular form of composition based on neural modules and present a set of RL problems that intuitively admit compositional solutions. Empirically, we demonstrate that neural composition indeed captures the underlying structure of this space of problems. We further propose a compositional lifelong RL method that leverages accumulated neural components to accelerate the learning of future tasks while retaining performance on previous tasks via off-line RL over replayed experiences.

Recommended citation:

@inproceedings{mendez2022modular,
  title={Modular Lifelong Reinforcement Learning via Neural Composition},
  author={Mendez-Mendez, Jorge and van Seijen, Harm and Eaton, Eric},
  booktitle={10th International Conference on Learning Representations (ICLR-22)},
  year={2022}
}