Transfer Learning via Minimizing the Performance Gap Between Domains

Advances in Neural Information Processing Systems (NeurIPS), 2019

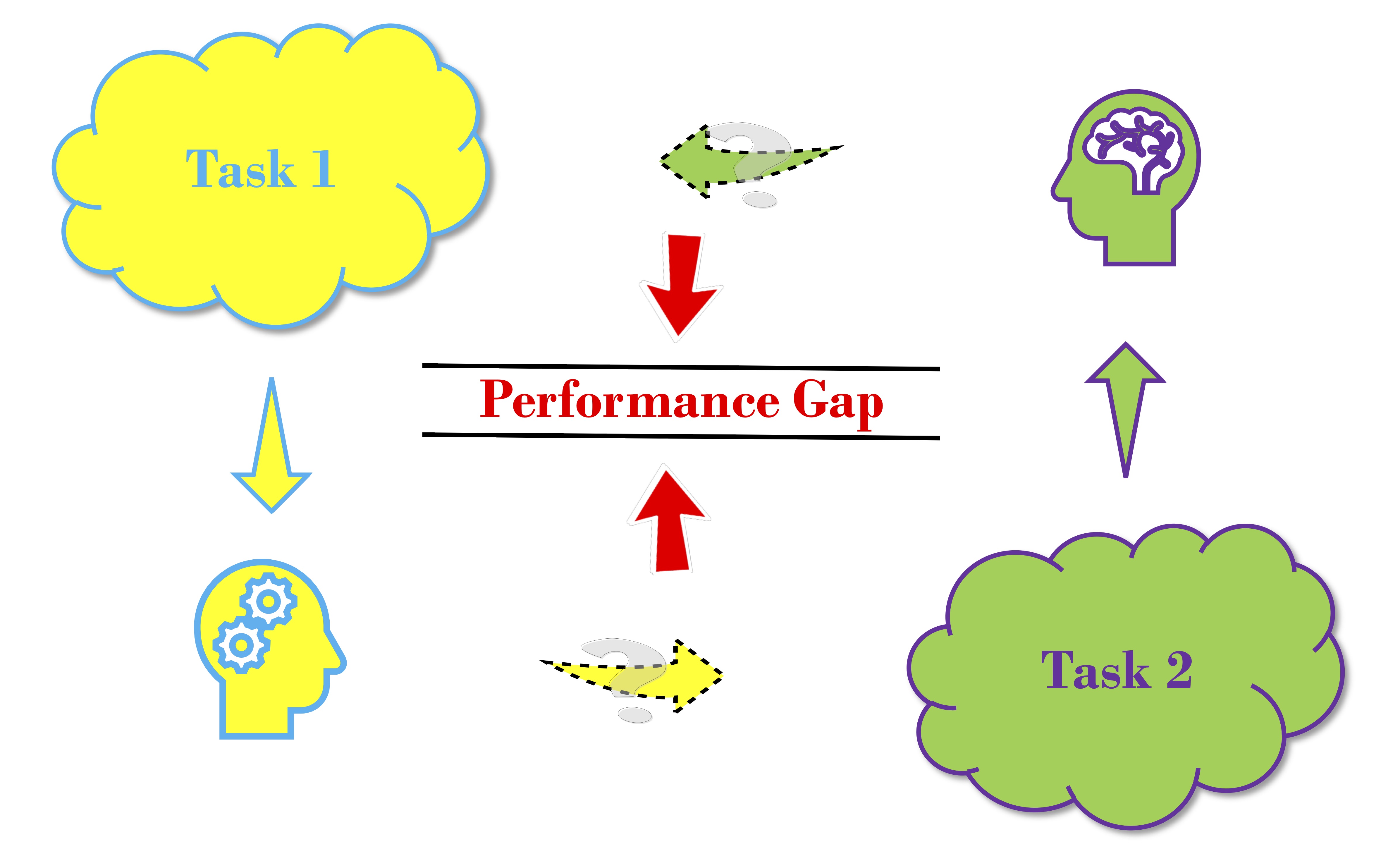

Abstract: We propose a new principle for transfer learning, based on a straightforward intuition: if two domains are similar to each other, the model trained on one domain should also perform well on the other domain, and vice versa. To formalize this intuition, we define the performance gap as a measure of the discrepancy between the source and target domains. We derive generalization bounds for the instance weighting approach to transfer learning, showing that the performance gap can be viewed as an algorithm-dependent regularizer, which controls the model complexity. Our theoretical analysis provides new insight into transfer learning and motivates a set of general, principled rules for designing new instance weighting schemes for transfer learning. These rules lead to gapBoost, a novel and principled boosting approach for transfer learning. Our experimental evaluation on benchmark data sets shows that gapBoost significantly outperforms previous boosting-based transfer learning algorithms.
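The intuition in the abstract can be illustrated with a small numerical sketch. The code below is not the paper's formal definition of the performance gap and is not gapBoost; it is a simplified proxy under stated assumptions: linear least-squares models, squared loss, and synthetic domains. The helper names (`cross_domain_gap`, `make_domain`) are hypothetical and introduced only for illustration. Each model is trained on one domain, and the change in its loss is measured when it is evaluated on the other.

```python
import numpy as np

def fit_least_squares(X, y):
    """Fit a linear model by ordinary least squares; returns the weight vector."""
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    return w

def mse(w, X, y):
    """Mean squared error of the linear model w on (X, y)."""
    return float(np.mean((X @ w - y) ** 2))

def cross_domain_gap(source, target):
    """Hypothetical proxy: train on each domain, compare losses across domains.

    Assumption: this only mirrors the abstract's intuition; it is not the
    paper's formal performance-gap definition.
    """
    (Xs, ys), (Xt, yt) = source, target
    w_s = fit_least_squares(Xs, ys)
    w_t = fit_least_squares(Xt, yt)
    gap_s = abs(mse(w_s, Xt, yt) - mse(w_s, Xs, ys))  # source model on target
    gap_t = abs(mse(w_t, Xs, ys) - mse(w_t, Xt, yt))  # target model on source
    return max(gap_s, gap_t)

def make_domain(rng, w_true, n=200, noise=0.1):
    """Synthetic regression domain with Gaussian features and label noise."""
    X = rng.normal(size=(n, len(w_true)))
    y = X @ w_true + noise * rng.normal(size=n)
    return X, y

rng = np.random.default_rng(0)
source = make_domain(rng, np.array([1.0, -2.0]))
similar = make_domain(rng, np.array([1.1, -1.9]))     # close to the source
different = make_domain(rng, np.array([-3.0, 4.0]))   # far from the source

gap_similar = cross_domain_gap(source, similar)
gap_different = cross_domain_gap(source, different)
print(gap_similar, gap_different)
```

In this toy setting, the domain whose labeling function is close to the source yields a much smaller gap than the dissimilar one, which is the behavior the abstract's intuition describes.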

Recommended citation:

@inproceedings{wang2019transfer,
  author = {Wang, Boyu and Mendez-Mendez, Jorge and Cai, Mingbo and Eaton, Eric},
  booktitle = {Advances in Neural Information Processing Systems 32 (NeurIPS)},
  title = {Transfer Learning via Minimizing the Performance Gap Between Domains},
  year = {2019}
}